Puppeteer: Exploring Intuitive Hand Gestures and Upper-Body Postures for Manipulating Human Avatar Actions

VRST 2022 Paper

Ching-Wen Hung, Ruei-Che Chang, Hong-Sheng Chen, Chung Han Liang, Liwei Chan, Bing-Yu Chen

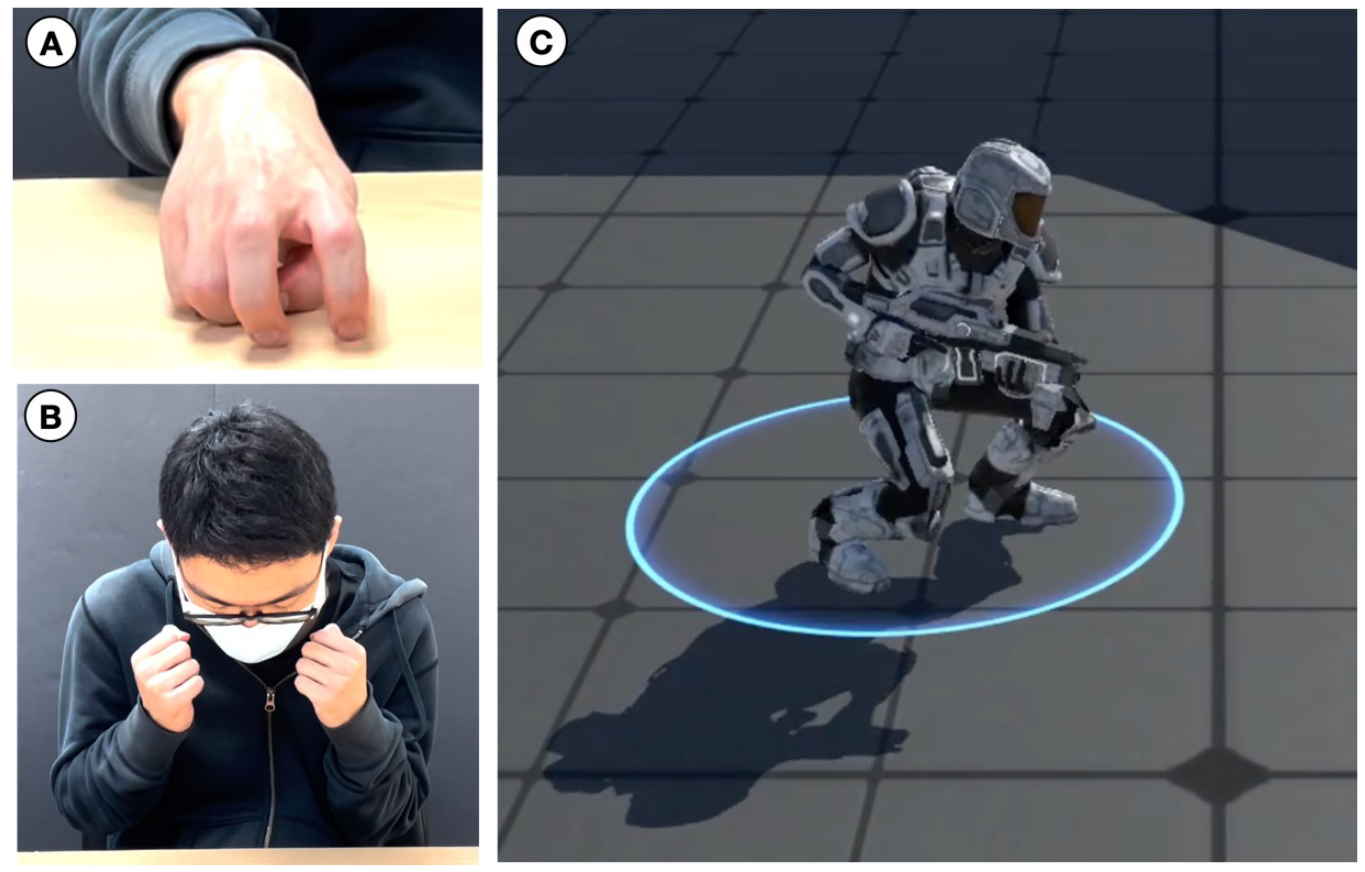

Body-controlled avatars provide a more intuitive way to control virtual avatars in real time but require more physical space and more user effort. In contrast, hand-controlled avatars offer more dexterous and less fatiguing manipulation within a close-range space but provide fewer sensory cues than the body-based method. This paper investigates the differences between the two manipulation methods and explores the possibility of combining them. We first performed a formative study to understand when and how users prefer using their hands and bodies to represent avatars' actions in currently popular video games. Based on a survey of top video games, we decided to focus on representing human avatars' motions. We also found that players used their bodies to represent avatar actions but switched to their hands when the actions were too unrealistic or exaggerated to mimic with the body (e.g., flying in the sky, rolling over quickly). Hand gestures also provide an alternative to lower-body motions when players want to sit during gaming and do not want to expend extensive effort to move their avatars. Hence, we focused on the design of hand gestures and upper-body postures. We present Puppeteer, an input prototype system that allows players to directly control their avatars through intuitive hand gestures and upper-body postures. We selected 17 avatar actions discovered in the formative study and conducted a gesture elicitation study in which 12 participants designed the hand gestures and upper-body postures that best represent each action. We then implemented a prototype system that uses the MediaPipe framework to detect keypoints and a self-trained model to recognize 17 hand gestures and 17 upper-body postures. Finally, three applications demonstrate the interactions enabled by Puppeteer.
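The recognition step described above (detected keypoints fed to a trained classifier) can be illustrated with a minimal sketch. The abstract does not specify the self-trained model or its features, so everything here is an assumption for illustration only: keypoints are taken to be plain (x, y) pairs already extracted by a detector such as MediaPipe, the normalization scheme is hypothetical, and a nearest-centroid classifier stands in for the actual model. The names `normalize`, `NearestCentroidClassifier`, and the toy three-point "gestures" are invented for this example.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def normalize(keypoints: Sequence[Point]) -> List[float]:
    """Make keypoints translation- and scale-invariant (assumed preprocessing).

    The first keypoint becomes the origin, and all coordinates are divided
    by the largest distance from that origin.
    """
    ox, oy = keypoints[0]
    shifted = [(x - ox, y - oy) for x, y in keypoints]
    scale = max(math.hypot(x, y) for x, y in shifted) or 1.0  # avoid divide-by-zero
    return [c / scale for point in shifted for c in point]


class NearestCentroidClassifier:
    """Stand-in for the paper's self-trained model (not the authors' method)."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, samples: Sequence[Sequence[Point]], labels: Sequence[str]):
        grouped: Dict[str, List[List[float]]] = {}
        for sample, label in zip(samples, labels):
            grouped.setdefault(label, []).append(normalize(sample))
        # Each class centroid is the per-feature mean of its normalized samples.
        for label, feats in grouped.items():
            self.centroids[label] = [sum(col) / len(feats) for col in zip(*feats)]
        return self

    def predict(self, keypoints: Sequence[Point]) -> str:
        features = normalize(keypoints)
        return min(
            self.centroids,
            key=lambda label: math.dist(features, self.centroids[label]),
        )


# Toy data: three keypoints per sample instead of a full hand or body skeleton.
train_samples = [
    [(0.0, 0.0), (0.0, 1.0), (0.0, 2.0)],   # hypothetical "open" gesture
    [(0.0, 0.0), (0.5, 0.5), (0.0, 0.3)],   # hypothetical "fist" gesture
]
train_labels = ["open", "fist"]
clf = NearestCentroidClassifier().fit(train_samples, train_labels)

# The same "open" shape, shifted and scaled, as a detector might report it.
query = [(10.0, 10.0), (10.0, 12.0), (10.0, 14.0)]
predicted = clf.predict(query)
```

In this sketch, normalizing before classification means a gesture is recognized regardless of where the hand appears in the frame or how large it is. A real system would likely need many samples per class and a more expressive model to separate 17 gestures and 17 postures.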